Background

ngx_kafka_module is an nginx plug-in that integrates Kafka into nginx. This makes it easy to collect tracking (buried-point) data from the front-end pages of web projects. For example, if tracking points are placed on the front-end pages, users' access and request data can be sent directly to Kafka through HTTP requests, and back-end programs can consume the messages from Kafka and perform real-time calculations on them. For instance, Spark Streaming can consume the Kafka data in real time to analyze users' PV (page views) and UV (unique visitors), user behavior, and the funnel conversion rates of pages. This helps optimize the system or analyze visiting users dynamically in real time.
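To make the PV/UV idea concrete, here is a minimal Python sketch of the per-page counting a consumer might perform on a batch of tracking events. It assumes each Kafka message has already been decoded into a dict with hypothetical `page` and `user_id` fields. It is not Spark Streaming code, only the aggregation logic written in plain Python.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping, Set, Tuple


def page_pv_uv(events: Iterable[Mapping[str, str]]) -> Dict[str, Tuple[int, int]]:
    """Return {page: (pv, uv)} for a batch of tracking events.

    PV (page views) counts every event for a page; UV (unique visitors)
    counts distinct user IDs per page. The "page" and "user_id" keys are
    hypothetical field names chosen for this sketch.
    """
    pv: Dict[str, int] = defaultdict(int)
    visitors: Dict[str, Set[str]] = defaultdict(set)
    for event in events:
        page = event["page"]
        pv[page] += 1                       # every hit counts toward PV
        visitors[page].add(event["user_id"])  # a set keeps only distinct users
    return {page: (pv[page], len(visitors[page])) for page in pv}


if __name__ == "__main__":
    batch = [
        {"page": "/home", "user_id": "u1"},
        {"page": "/home", "user_id": "u2"},
        {"page": "/home", "user_id": "u1"},
        {"page": "/cart", "user_id": "u2"},
    ]
    print(page_pv_uv(batch))  # {'/home': (3, 2), '/cart': (1, 1)}
```

In a streaming job the same counting would run per time window; the in-memory sets used here are only suitable for small batches.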

Integration steps

1. Install git.

yum install -y git

2. Switch to the /usr/local/src directory and clone the source code of librdkafka, the Kafka C client library.

cd /usr/local/src
git clone https://github.com/edenhill/librdkafka

3. Enter the librdkafka directory, install the build dependencies, then compile and install the library.

cd librdkafka
yum install -y gcc gcc-c++ pcre-devel zlib-devel
./configure
make && make install

4. Go back to /usr/local/src and clone the source code of ngx_kafka_module, the plug-in that integrates Kafka with nginx.

cd /usr/local/src
git clone https://github.com/brg-liuwei/ngx_kafka_module

5. Enter the nginx source directory (nginx-1.12.2 here, unpacked under /usr/local/src), then configure and compile nginx so that the plug-in is compiled into it at the same time.

cd /usr/local/src/nginx-1.12.2
./configure --add-module=/usr/local/src/ngx_kafka_module/
make && make install

6. Modify the nginx configuration file (nginx.conf): set the Kafka broker list and bind a location to a Kafka topic.

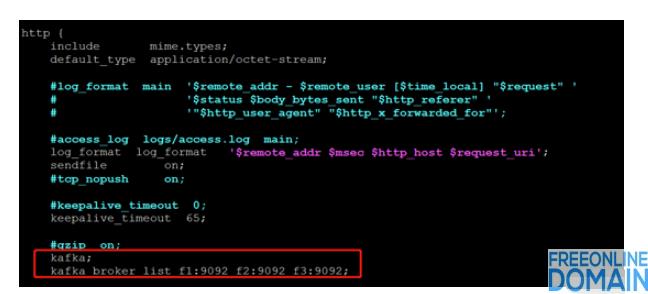

# Add configuration (2 places)
# 1. In the http block:
kafka;
kafka_broker_list f1:9092 f2:9092 f3:9092;

# 2. In the server block:
location = /kafka/access {
    kafka_topic access888;
}

As shown below:

7. Start the ZooKeeper and Kafka clusters, and create the topic (access888).

zkServer.sh start
kafka-server-start.sh -daemon config/server.properties

8. Start nginx. It fails with an error saying that librdkafka.so.1 cannot be found:

error while loading shared libraries: librdkafka.so.1: cannot open shared object file: No such file or directory

9. Register /usr/local/lib, where librdkafka was installed, with the dynamic linker so the shared library can be loaded.

# Add the library directory to the linker configuration (persists across reboots)
echo "/usr/local/lib" >> /etc/ld.so.conf
# Rebuild the linker cache manually
ldconfig
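Before restarting nginx, you can check whether the dynamic loader now resolves the library. The following small Python sketch is an addition of this write-up, not part of the original steps. It tries to open `librdkafka.so.1` through `ctypes`, which calls `dlopen()` and therefore follows roughly the same search rules that nginx relies on at startup (LD_LIBRARY_PATH, the cache built by `ldconfig`, and the default system directories). The helper name `can_load` is hypothetical.

```python
import ctypes


def can_load(library: str) -> bool:
    """Return True if the dynamic loader can resolve and open `library`.

    ctypes.CDLL calls dlopen(), which searches LD_LIBRARY_PATH, the cache
    built by ldconfig from /etc/ld.so.conf, and the default directories.
    A False result usually means nginx will hit the same
    "cannot open shared object file" error.
    """
    try:
        ctypes.CDLL(library)
    except OSError:
        return False
    return True


if __name__ == "__main__":
    name = "librdkafka.so.1"
    if can_load(name):
        print(f"{name} can be loaded")
    else:
        print(f"{name} not found; check /etc/ld.so.conf and rerun ldconfig")
```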

10. Test: send data to nginx, then check whether a Kafka consumer receives it.

curl http://localhost/kafka/access -d "Message send to Kafka topic"
curl http://localhost/kafka/access -d "Xiaowei 666"

You can also simulate the requests sent by a page's tracking (buried-point) interface:
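As an illustration of such a simulated tracking request, here is a Python sketch that uses only the standard library. It POSTs a JSON-encoded event to the `/kafka/access` location configured in step 6; as with `curl -d` in step 10, the request body is what the module sends to the Kafka topic. The event fields (`user_id`, `page`, `action`, `ts`) and the helper names are hypothetical, chosen for this example.

```python
import json
import time
import urllib.request
from typing import Any, Dict

# Endpoint from step 6; adjust host/port to your nginx instance.
KAFKA_ENDPOINT = "http://localhost/kafka/access"


def build_tracking_request(event: Dict[str, Any],
                           url: str = KAFKA_ENDPOINT) -> urllib.request.Request:
    """Build a POST request whose body is the JSON-encoded tracking event."""
    body = json.dumps(event, separators=(",", ":")).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_tracking_event(event: Dict[str, Any], url: str = KAFKA_ENDPOINT) -> int:
    """Send one event to nginx and return the HTTP status code."""
    request = build_tracking_request(event, url)
    with urllib.request.urlopen(request, timeout=5) as resp:
        return resp.status


if __name__ == "__main__":
    # Hypothetical buried-point event: one page view by user u1.
    event = {"user_id": "u1", "page": "/home", "action": "view", "ts": int(time.time())}
    print(send_tracking_event(event))
```

Each call produces one Kafka message, so a consumer on the topic should print one JSON line per event sent.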

The messages consumed from Kafka in the background are shown in the figure below:

Copyright notice: no reproduction without permission.
