Summary of using tomcat together with nginx

Many people have probably heard of nginx, a small web server that has been steadily eating into the market share of Apache and IIS. But what exactly does it do? Many people may not know.

One of nginx's main jobs is acting as a reverse proxy. Many people have heard that term, but not everyone is clear on what it means. Here is a description from Baidu Encyclopedia:

A reverse proxy is a proxy server that accepts connection requests from the internet, forwards them to a server on the internal network, and returns that server's result to the client that made the request. To the outside world, the proxy server appears to be the server itself.

That is quite clear. In reverse-proxy mode, a proxy server is responsible for forwarding: it looks like the real server, but it only passes requests on and relays the data returned by the real server. This is exactly what nginx does for us. We have nginx listen on a port such as 80, but forward the requests to tomcat on port 8080, which does the real work. When tomcat has finished, it does not return the result straight to the client; it returns it to nginx, which passes it back. From the outside it looks as if nginx handled the request, but in fact tomcat did.

Following this idea, you may already be thinking that static files could be handed to nginx directly. Exactly: nginx is very often used as a static file server, which also makes it convenient to cache static files such as CSS, JS, html and htm.

Without further ado, let's take a look at how nginx is used.

1) Of course, we first need to download the software from the official nginx website. I am using version 1.1.7; since we only use basic features here, later versions should work the same way.

I am on Windows, so I downloaded the Windows version. After downloading, start it right away: go into the nginx folder and run the command start nginx.

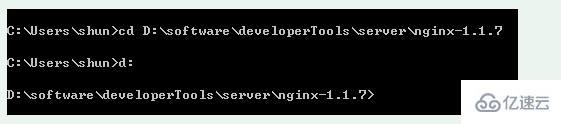

For example, I unpacked it to D:\software\developerTools\server\nginx-1.1.7. If you open cmd and type cd D:\software\developerTools\server\nginx-1.1.7, people not used to the command line may be surprised that the prompt does not move to that folder: cmd does not switch to another drive on cd unless you tell it to. So we first type D: to switch to the D drive (alternatively, cd /d changes the drive and the folder in one step), and then start nginx from that folder.

If we now open Task Manager, we can see two nginx.exe processes, which shows that nginx has started. Why there are two (a master process and a worker process) we won't go into here.

Now that nginx is running, we might start tomcat and expect http://localhost to take us straight to tomcat.

Not so fast. First let's look at what nginx serves right after startup. Visit http://localhost directly:

We can see that nginx started successfully, and the page we get is served from nginx's own folder.

So where is this set up? That brings us to an important configuration file, nginx.conf.

2) Inside the nginx folder there is a conf folder containing several files. Open nginx.conf and look at this section:

This section is a server block, which defines one (proxy) server. Of course, you can configure more than one.
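For reference, in a stock nginx distribution the section in question is the default server block. It looks roughly like this (comments trimmed; the exact contents may differ slightly between versions):

```nginx
# Default server block from conf/nginx.conf (trimmed; may vary by version)
server {
    listen       80;           # port this server listens on
    server_name  localhost;    # host name compared with the request's Host header

    location / {
        root   html;                    # look files up in the "html" folder of nginx
        index  index.html index.htm;    # tried in order when a directory such as / is requested
    }

    # show 50x.html for server errors
    error_page   500 502 503 504  /50x.html;
    location = /50x.html {
        root   html;
    }
}
```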

Let's analyze it carefully:

listen: the port this server listens on; by default it is port 80. Note that if we configure more than one server, they must differ either in their listen port or in their server_name, otherwise nginx cannot tell which one a request belongs to.

server_name: the host name(s) this server answers to. nginx compares it with the Host header of the incoming request; it does not decide where the request is sent. Here it is localhost, so a request to http://localhost is handled by this server and served from the nginx folder.

location: the path to match. Here it is set to /, which matches all requests.

root: when a request matches this location, nginx looks for the corresponding file in this folder (a relative path such as html is resolved against the nginx folder). This will be very useful later when we use nginx to serve static files.

index: the file to return when the request does not name a specific file (for example, just /). Several files can be listed and nginx tries them in order: if the first does not exist, it tries the second, and so on.

The error_page directive that follows defines the page shown for errors. We don't need it for the time being, so let's leave it alone.
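To make the listen/server_name rule concrete, here is a small sketch with two server blocks sharing port 80 and told apart only by server_name. The host name static.example.test and the folder D:/static are hypothetical (the name would need an entry in your hosts file). nginx first selects by listen port, then by server_name; if no name matches, the first server for that port handles the request.

```nginx
# Hypothetical example: two servers on the same port, distinguished by server_name
server {
    listen       80;
    server_name  localhost;               # requests for http://localhost/ land here
    location / {
        root   html;
        index  index.html index.htm;      # index.html first, index.htm if it is missing
    }
}

server {
    listen       80;
    server_name  static.example.test;     # hypothetical second host name
    location / {
        root   D:/static;                 # hypothetical folder holding static files
    }
}
```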

Now that we know what the configuration means, how do we make a request to localhost go to tomcat? In fact, only the location block needs to change:

```nginx
server_name localhost;

location / {
    proxy_pass http://localhost:8080   # no trailing ";" yet, which causes the error below
}
```

server_name can stay localhost: it is only the host name nginx answers to, and the backend's port belongs in proxy_pass. My tomcat runs on port 8080; adjust this to your own setup. The new element here is proxy_pass, which gives the proxy target: matching requests are forwarded to that address, unlike root, which must point to a folder on disk.

At this point we have modified the file. Do we have to shut nginx down and start it again? No, that is not necessary: nginx can reload its configuration file.

From the nginx folder we simply run nginx -s reload:

We celebrated too early; nginx reports an error:

What is it? The error points at line 45 of nginx.conf, yet at first nothing there looked wrong. Looking more carefully, we found that the proxy_pass line we added does not end with a semicolon. That is the problem. Add the semicolon, run the command again, and this time there is no error.
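With the semicolon added, the whole server block for this first example looks roughly like the sketch below (it assumes tomcat on localhost:8080). The two proxy_set_header lines are an optional addition not used in the steps above: they pass the original host name and client address on to tomcat, which otherwise sees the request as coming from nginx for the host localhost:8080.

```nginx
# Sketch of the complete server block after the fix (assumes tomcat on localhost:8080)
server {
    listen       80;
    server_name  localhost;

    location / {
        proxy_pass http://localhost:8080;      # every request is forwarded to tomcat
        # Optional: keep the client's Host header and address visible to tomcat
        proxy_set_header Host      $host;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
```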

If you don't want to reload yet but only want to check whether your configuration file has any problems, you can run nginx -t:

This checks the configuration file for errors. In everything that follows, we assume you run nginx -s reload after each change to reload the configuration file. Please keep that in mind.

With everything in order, we reopen http://localhost and see the following page:

This time it is not the nginx welcome page we saw before but tomcat's default home page. Every link on it works, just as if we had visited http://localhost:8080 directly.

3) Above we tried a simple example: nginx forwards every request, which is the so-called reverse proxy. In practice, though, that is rarely what we want; we need to route requests by file type. JSP, for example, must be handled by tomcat, because nginx is not a servlet container and cannot process JSP, whereas html, js and css need no processing, so nginx can serve them itself.

So let's send JSP pages to tomcat and let nginx serve html, png, js and similar files directly.

The key element here is location. It involves some regular expressions, but nothing difficult:

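```nginx
# "~" starts a regular-expression match; "\." matches a literal dot
location ~ \.jsp$ {
    proxy_pass http://localhost:8080;
}

location ~ \.(html|js|css|png|gif)$ {
    root D:/software/developerTools/server/apache-tomcat-7.0.8/webapps/ROOT;
}
```

We also remove the location / block configured earlier, so that only these two rules apply. (nginx falls back to a matching prefix location such as / whenever no regular expression matches, so with it left in, every other request would still be proxied to tomcat.)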
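Put together, the server block for this step might look like the following sketch. One deliberate change from the rules above: it uses ~* instead of ~ for the static files, which makes the match case-insensitive so that a file such as LOGO.PNG is also served by nginx; keep ~ if you prefer exact case.

```nginx
# Sketch of the server block for step 3: no "location /", only the two regex rules
server {
    listen       80;
    server_name  localhost;

    # JSP pages: case-sensitive regex, forwarded to tomcat
    location ~ \.jsp$ {
        proxy_pass http://localhost:8080;
    }

    # Static files: "~*" = case-insensitive regex, read from tomcat's ROOT application
    location ~* \.(html|js|css|png|gif)$ {
        root D:/software/developerTools/server/apache-tomcat-7.0.8/webapps/ROOT;
    }
}
```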

Now let's visit http://localhost again.

If we don't name a JSP page, the page cannot be found: none of our location rules maps / to a page that exists, so nginx returns a 404 error and we land on nginx's own error page.

But when we visit http://localhost/index.jsp, we see the familiar page:

Moreover, all the images display normally: they are png files, so nginx looks them up directly in the tomcat/webapps/ROOT directory. However, if we click the Manager Application HOW-TO link, we find:

It still can't be found. Why? This is an html page, but it lives in the docs directory rather than in ROOT, and our html rule only looks in ROOT, so the page is not found.

Normally, when we use nginx to serve static files, we put all of them (html, htm, js, css, etc.) in the same folder, so this problem does not arise. tomcat is different: each of its applications has its own directory, so a single root cannot cover them all.
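One way around this, sketched below as a hypothetical extra rule inside the same server block, is to give the docs application its own location whose root is the webapps folder, so that /docs/manager-howto.html maps to webapps/docs/manager-howto.html. It must be placed before the generic static-file rule, because nginx uses the first regular-expression location that matches.

```nginx
# Hypothetical extra rule for static files of tomcat's docs application.
# Put it BEFORE the generic static-file rule: the first matching regex location wins.
location ~ ^/docs/.*\.(html|js|css|png|gif)$ {
    # root is webapps (not webapps/docs) because the URI already starts with /docs/
    root D:/software/developerTools/server/apache-tomcat-7.0.8/webapps;
}
```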

4) Some people will say: all of this only ever reaches one server, but what if we want nginx to switch to another server automatically when one goes down? nginx has actually taken this into account.

This is where the proxy_pass we used earlier really pays off.

Let's modify the first example, the one that proxies everything.

The final version is as follows:

```nginx
upstream local_tomcat {
    server localhost:8080;
}

server {
    location / {
        proxy_pass http://local_tomcat;
    }
    # ... other settings omitted
}
```

We added an upstream block outside the server block and, in proxy_pass, wrote http:// followed by the upstream's name.

Visiting http://localhost again gives the same result as the first example, and all the links work, which means our configuration is correct.

Note that the server entries inside upstream must not include http://, whereas proxy_pass must include it.

We said earlier that nginx can switch to another server when one goes down. How is that done?

It is actually very simple: add another server to the local_tomcat upstream. For example, I also have a jetty running on port 9999, so the configuration becomes:

```nginx
upstream local_tomcat {
    server localhost:8080;
    server localhost:9999;
}
```

Now we stop tomcat and leave only jetty running. Let's visit http://localhost and see what happens:

We see that the request went to jetty. Because of how jetty is set up, its home page is not shown here, but that doesn't matter: the automatic switch to another server when one goes down works.
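One detail worth knowing: with two servers listed as above, nginx spreads requests over both of them in turn (round-robin) and only skips one that has failed; jetty is not kept idle until tomcat goes down. If jetty should really be a standby, the backup parameter says so. The sketch below also uses max_fails and fail_timeout to control when tomcat counts as down; the values 3 and 30s are arbitrary choices for illustration.

```nginx
# Sketch: tomcat handles the traffic, jetty is used only while tomcat is unavailable
upstream local_tomcat {
    # after 3 failed attempts within 30s, tomcat is treated as unavailable for 30s
    server localhost:8080 max_fails=3 fail_timeout=30s;
    server localhost:9999 backup;   # receives requests only when the primary is unavailable
}
```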

Sometimes, though, we want more than a fallback for when a server goes down: we want one server to receive a larger share of the requests than the other. This is done by adding weight=N after the server address; the larger the number, the larger that server's share of requests.

```nginx
upstream local_tomcat {
    server localhost:8080 weight=1;
    server localhost:9999 weight=5;
}
```

Here we gave jetty a higher weight, so it is chosen more often. Indeed, when we refresh http://localhost, jetty answers far more often and tomcat only rarely (with weights 1 and 5, tomcat should get roughly one request in six). If you do use weights like this, don't make them too lopsided, so that a single server is not overloaded.

Of course, server entries accept other parameters as well, such as down, which marks a server as temporarily not in use; see the nginx wiki for the rest. Since this has grown long, one last question people often run into: how do you shut nginx down? Simply run nginx -s stop.
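As an illustration of combining these parameters, the sketch below keeps the weights from above and adds a third server marked down, so nginx leaves it out of rotation until the flag is removed and the configuration is reloaded. The server on port 9090 is hypothetical.

```nginx
# Sketch: weighted servers plus one server temporarily taken out of rotation
upstream local_tomcat {
    server localhost:8080 weight=1;
    server localhost:9999 weight=5;
    server localhost:9090 down;     # hypothetical third server, not used for now
}
```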

Copyright notice: no reproduction without permission.
