Information collection mainly means gathering server configuration information and sensitive website information, including domain name information, subdomains, target website information, the real IP of the target website, directories and files, open ports and services, middleware, scripting language, and so on. Drawing on the experience of experts, I (still a beginner) have summed up eight methods of information collection. There are surely shortcomings, so advice and corrections are welcome. In my opinion, the keys to success are convenient tools, a reliable proxy IP pool, wordlists built up through daily accumulation, clear and understandable mind maps, and experience gained through plenty of practice.

I. Domain name information collection

1. Whois query

Whois (pronounced "who is"; it is not an abbreviation) is a standard internet protocol used to query the IP and owner of a domain name. Simply put, it is backed by a database that tells you whether a domain name has been registered and, if so, the registration details (such as the domain name owner and the registrar).

Whois is used to query domain name information. In the early days, whois queries were mostly made from a command-line interface; now there are online query tools with a simplified web interface that can query several databases at once. The web tools still rely on the whois protocol to send query requests to the server, and the command-line tools are still widely used by system administrators. Whois usually uses TCP port 43. The whois information for each domain name or IP is kept by the corresponding management organization.

In a whois query we mainly focus on the registrar, the registrant, email addresses, the DNS servers, and the registrant's contact number.
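As a rough illustration of the protocol described above, the sketch below sends a single query over TCP port 43 and then picks out the fields we care about. The function names are hypothetical, the whois server must be supplied by the caller, and the "Key: value" layout is an assumption that holds for many registries but not all of them.

```python
import socket


def build_whois_query(domain: str) -> bytes:
    """A whois request is just the query string terminated by CRLF."""
    return domain.strip().encode("ascii") + b"\r\n"


def whois_lookup(domain: str, server: str, port: int = 43, timeout: float = 10.0) -> str:
    """Send one query to a whois server and return the raw text response."""
    with socket.create_connection((server, port), timeout=timeout) as sock:
        sock.sendall(build_whois_query(domain))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection when it is done
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_whois_fields(text: str, wanted=("registrar", "registrant", "email", "name server")) -> dict:
    """Collect 'Key: value' lines whose key mentions one of the wanted terms."""
    found = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep or not value.strip():
            continue
        if any(term in key.strip().lower() for term in wanted):
            found.setdefault(key.strip(), []).append(value.strip())
    return found
```

A call such as `parse_whois_fields(whois_lookup("example.com", "whois.verisign-grs.com"))` would return a dictionary of the matching lines; the server name here is only an example for .com domains, and other TLDs use other servers.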

At present, the most common way to query is through third-party platforms such as webmaster tools. You can also query registered domain names at the domain registrars themselves, such as Wanwang (Alibaba Cloud), West.cn, Xinnet, Nawang, ZZY, 35.com, DNS.com.cn, CNDNS, 22.cn, eName and so on, or through the registrar where you registered your own domain.

The WHOIS lookup addresses of the major registrars and third-party webmaster tools are as follows:

Wanwang (Alibaba Cloud) WHOIS lookup: https://whois.aliyun.com/

West.cn WHOIS lookup: https://whois.west.cn/

Xinnet WHOIS lookup: http://whois.xinnet.com/domain/whois/index.jsp

Nawang WHOIS lookup: http://whois.nawang.cn/

ZZY WHOIS lookup: https://www.zzy.cn/domain/whois.html

35.com WHOIS lookup: https://cp.35.com/chinese/whois.php

DNS.com.cn WHOIS lookup: http://www.dns.com.cn/show/domain/whois/index.do

CNDNS WHOIS lookup: https://whois.cndns.com/

22.cn WHOIS lookup: https://www.22.cn/domain/

eName WHOIS lookup: https://whois.ename.net/

On Kali, you can query from the command line: whois -h <whois server address> <domain name>

The following are third-party webmaster-tool lookup addresses (some sites hide the registrant information or tell you to contact the domain registrar for it; in that case you can try who.is):

Webmaster Tools (Chinaz) WHOIS lookup: http://whois.chinaz.com/

Aizhan WHOIS lookup: https://whois.aizhan.com/

Tencent Cloud WHOIS lookup: https://whois.cloud.tencent.com/

who.is (outside China): https://who.is/

ThreatBook: https://x.threatbook.cn/

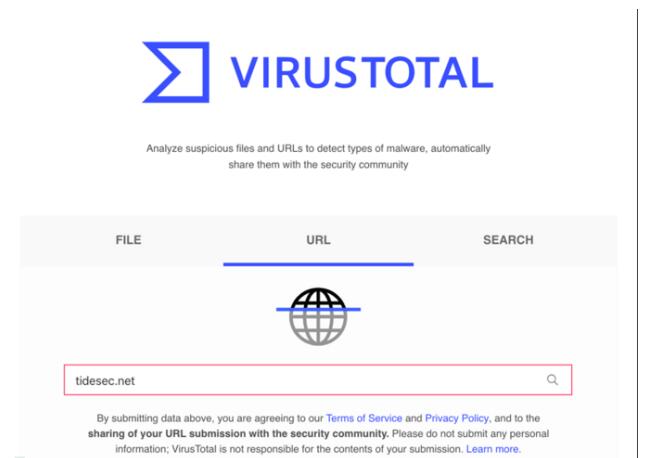

VirusTotal: https://www.virustotal.com

Kali also comes with a whois client and some integrated tools.

2. ICP filing information query

Website filing (ICP filing) is the registration that a website owner submits to the relevant state departments as required by national laws and regulations. It is how the Ministry of Industry and Information Technology manages websites and aims to prevent illegal business activity on the Internet. It mainly applies to websites hosted in China.

In a filing query we mainly pay attention to company information such as the name, filing number, website owner, legal representative, email address, and contact number.

Common websites for querying filing information are as follows:

Tianyancha: https://www.tianyancha.com/

ICP filing inquiry network: http://www.beianbeian.com/

National Enterprise Credit Information Publicity System: http://www.gsxt.gov.cn/index.html

Aizhan ICP filing lookup: https://icp.aizhan.com

II. Subdomain collection

Subdomains (second-level names and below) are the domain names that sit under the target's main domain. The more subdomains we collect, the more test targets we have, and the better the chance of successfully penetrating the target system. When the main site is well defended, a subdomain is often a good way in. The common methods are as follows.

1. Detection tools

There are many detection tools, but what matters most is improving your wordlist day by day; a strong wordlist is what really counts. Common tools include

Layer subdomain excavator, subDomainsBrute, K8, orangescan, DNSRecon, Sublist3r, dnsmaper, wydomain, etc. I would single out Layer subdomain excavator (simple to use, with a detailed interface), Sublist3r (lists the domain names found across multiple sources) and subDomainsBrute. These tools can be downloaded from GitHub, where usage instructions are also available.

The links are as follows:

subDomainsBrute: https://github.com/lijiejie/subDomainsBrute

Sublist3r: https://github.com/aboul3la/Sublist3r

Layer (enhanced version 5.0): https://pan.baidu.com/s/1Jja4QK5BsAXJ0i0Ax8Ve2Q password: aup5

https://d.chinacycc.com (recommended by an expert, who says it works well, but it is a paid service)
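To make the role of the wordlist concrete, here is a minimal sketch of dictionary-based subdomain detection: each word is prefixed to the target domain and resolved through the system resolver. This is not how any of the tools above is implemented; the function names are hypothetical, and wildcard DNS (where every name resolves) is deliberately not handled.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def candidates(domain: str, words) -> list:
    """Turn wordlist entries into candidate host names, deduplicated in order."""
    seen, out = set(), []
    for word in words:
        label = word.strip().lower().strip(".")
        if not label or label.startswith("#"):  # skip blanks and comment lines
            continue
        name = f"{label}.{domain}"
        if name not in seen:
            seen.add(name)
            out.append(name)
    return out


def resolve(name: str):
    """Return (name, sorted IPv4 list); the list is empty if the name does not resolve."""
    try:
        _, _, addrs = socket.gethostbyname_ex(name)
        return name, sorted(addrs)
    except (OSError, UnicodeError):
        return name, []


def brute_subdomains(domain: str, words, workers: int = 20) -> dict:
    """Resolve every candidate concurrently and keep only the names that exist."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(resolve, candidates(domain, words))
    return {name: ips for name, ips in results if ips}
```

In practice the `words` argument would be the lines of your own wordlist file, which is why the quality of that file largely decides how many subdomains are found.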

2. Search engines

Search engines such as Google, Bing, Shodan and Baidu can be used for search queries (site:www.xxx.com).

Google search syntax: https://editor.csdn.net/md/?articleId=107244142

Bing search syntax: https://blog.csdn.net/hansel/article/details/53886828

Baidu search syntax: https://www.cnblogs.com/k0xx/p/12794452.html

3. Enumeration of third-party aggregation applications

Third-party services aggregate a large number of DNS data sets and use them to retrieve subdomains of a given domain name.

(1) VirusTotal: https://www.virustotal.com/#/home/search

(2) DNSdumpster: https://dnsdumpster.com/

4. SSL certificate query

SSL/TLS certificates usually contain domain names, subdomain names and email addresses, which is exactly the information we want. Certificate Transparency (CT) is a project under which certificate authorities (CAs) publish every SSL/TLS certificate they issue to public logs. The easiest way to find the certificates for a domain name is to use a search engine that indexes these public CT logs.

The main websites are as follows:

(1) https://crt.sh/

(2) https://censys.io/

(3) https://developers.facebook.com/tools/ct/
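As a sketch of how CT data can be turned into a subdomain list, the code below builds a crt.sh search URL and extracts host names from the JSON it returns. It assumes crt.sh accepts `q` and `output=json` parameters and returns entries with a `name_value` field, which matches its behaviour at the time of writing but is not a guaranteed interface; the function names are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def crtsh_url(domain: str) -> str:
    """Build a crt.sh search URL for '%.domain' with JSON output (assumed interface)."""
    query = urllib.parse.urlencode({"q": f"%.{domain}", "output": "json"})
    return f"https://crt.sh/?{query}"


def extract_subdomains(entries, domain: str) -> list:
    """Collect unique names under the domain from a list of crt.sh-style entries."""
    domain = domain.lower()
    suffix = "." + domain
    names = set()
    for entry in entries:
        # name_value may hold several names separated by newlines
        for name in entry.get("name_value", "").splitlines():
            name = name.strip().lower()
            if name.startswith("*."):  # drop the wildcard label
                name = name[2:]
            if name == domain or name.endswith(suffix):
                names.add(name)
    return sorted(names)


def fetch_ct_subdomains(domain: str, timeout: float = 30.0) -> list:
    """Download the crt.sh results for the domain and return the names found."""
    with urllib.request.urlopen(crtsh_url(domain), timeout=timeout) as resp:
        entries = json.load(resp)
    return extract_subdomains(entries, domain)
```

Names found this way only show that a certificate was once issued; they still need to be resolved to confirm that the host exists today.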


5. Online website query (relatively less used)

(1) https://phpinfo.me/domain/ (inaccessible)

(2) http://i.links.cn/subdomain/ (inaccessible)

(3) http://dns.aizhan.com

(4) http://z.zcjun.com/ (quick response, recommended)

(5) Searching GitHub for subdomains.

III. Real IP collection

The IP address is essential in information collection. During domain name collection we have already gathered IP ranges. Whois, ping tests and fingerprinting websites can all reveal IP addresses, but many target servers sit behind a CDN. So what is a CDN, and how do we bypass it to find the real IP?

A Content Delivery Network (CDN) is a network for distributing content. It is an intelligent virtual network built on top of the existing network. It relies on edge servers deployed in many locations and, through the central platform's load balancing, content distribution and scheduling modules, lets users fetch the content they need from a nearby node. The remote origin web server responds only when real data interaction is required. This reduces network congestion and improves response speed and hit rate. The key technologies of a CDN are mainly content storage and content distribution.

Determining whether a CDN is in use:

(1) This is simple. Use multi-location ping services to check whether the resolved IP address is the same everywhere. If it is not, a CDN is most likely in use. Multi-location ping sites include http://ping.chinaz.com/ and http://ping.aizhan.com/.

(2) Use nslookup. The principle is the same as above: if the domain name resolves to multiple IP addresses, a CDN is most likely in use. Example with a CDN:

Example without CDN:
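The check in (1) and (2) can also be scripted. The sketch below is only a heuristic under the same assumption as the text: more than one distinct address across resolvers or ping nodes hints at a CDN, although plain DNS round-robin can produce the same picture. The function names are hypothetical.

```python
import socket


def resolve_ipv4(hostname: str) -> list:
    """Return the sorted, unique IPv4 addresses the local resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def probably_behind_cdn(ip_sets) -> bool:
    """ip_sets holds one iterable of IPs per vantage point (resolver or ping node).

    More than one distinct address overall is treated as a hint of a CDN.
    """
    distinct = set()
    for ips in ip_sets:
        distinct.update(ips)
    return len(distinct) > 1
```

For a single vantage point, `probably_behind_cdn([resolve_ipv4("www.example.com")])` reproduces the nslookup check; feeding in the addresses reported by several ping nodes reproduces the multi-location check.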

There are many ways to bypass a CDN. A reference link: https://www.cnblogs.com/qiudabai/p/9763739.html

One approach worth mentioning for bypassing a cloud CDN is FOFA's title search (view the page source to get the title), which can turn up the IP addresses of many CDN cache servers. Some CDN cache servers are distributed by region and their databases are kept in sync. If you can access them directly, you can bypass the cloud WAF for operations such as scanning and injection.

Here are some websites and tools for scanning the C segment (hosts in the same /24 range) and co-hosted sites (other sites on the same IP):

http://www.webscan.cc/

https://phpinfo.me/bing.php (probably not accessible)

masscan (a powerful tool): https://github.com/robertdavidgraham/masscan

Yujian (Royal Sword) 1.5: https://download.csdn.net/download/peng119925/10722958

C-segment query: IIS PUT Scanner (fast scanning, user-defined ports, banner information)

IV. Port testing

Port testing means probing the ports of the real IP address behind the website's domain name. Many websites are protected against large-scale scanning and vulnerability testing, but once you have bypassed the CDN and found the real server, it can be scanned extensively.

Common tools are nmap (powerful), masscan, zmap and the Yujian (Royal Sword) high-speed TCP port scanner (faster), plus some online port scanners: http://coolaf.com/tool/port and https://tool.lu/portscan/index.html

An idea borrowed from an expert: collect the IPs that the subdomains resolve to, sort them into a txt file, and then run batch port scanning, service brute-forcing and vulnerability scanning with nmap. This only works while your IP is not blocked; a proxy pool can help.

nmap -iL ip.txt --script=auth,vuln > finalscan.txt scans the common ports, runs the auth and vuln script categories, and exports the results.
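For a small target list, the batch port check can be sketched with plain sockets. This is only a TCP connect scan and does not replace nmap's auth and vuln scripts; the ip.txt layout (one host per line) and the function names are assumptions made for illustration.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def load_targets(lines) -> list:
    """Parse ip.txt-style content: one host per line, blanks and # comments skipped."""
    hosts = [line.strip() for line in lines]
    return [h for h in hosts if h and not h.startswith("#")]


def is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """TCP connect check: True if the connection is accepted."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def scan(hosts, ports, workers: int = 50) -> dict:
    """Return {host: [open ports]} for every host with at least one open port."""
    jobs = [(host, port) for host in hosts for port in ports]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flags = list(pool.map(lambda job: is_open(*job), jobs))
    result = {}
    for (host, port), ok in zip(jobs, flags):
        if ok:
            result.setdefault(host, []).append(port)
    return result
```

A typical call would be `scan(load_targets(open("ip.txt")), [21, 22, 80, 443, 3306])`, with the port list chosen to match the services you expect.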

I have summarized common ports and their attack directions, based on a book on web attack and defense, in my personal blog: https://blog.csdn.net/qq_32434307/article/details/107248881

V. Website information collection

Website information collection mainly covers: operating system, middleware, scripting language, database, server, web container, WAF, CDN, CMS, historical vulnerabilities, DNS zone transfer, etc. You can use the following methods to query.

Common fingerprinting tools: Yujian (Royal Sword) web fingerprint identification, lightweight web fingerprint identification, whatweb, etc.

(1) Common website information identification websites:

TideFinger (Tide fingerprint): http://finger.tidesec.net/ (recommended)

Yunxi (an invitation code is now required): http://www.yunsee.cn/info.html

CMS fingerprint identification: http://whatweb.bugscaner.com/look/

Third-party historical vulnerability databases: WooYun, Seebug, CNVD, etc.

(2) WAF identification: https://github.com/EnableSecurity/wafw00f

Kali comes with wafw00f, which can be run with a single command. It is best used under Kali, as it is troublesome to set up on Windows. Nmap also includes scripts for identifying WAF fingerprints.

(3) DNS zone transfer vulnerability, through which we can find:

1) the network topology and the IP ranges where servers are concentrated.

2) the IP addresses of database servers, such as nwpudb2.nwpu.edu.cn.

3) the IP addresses of test servers, such as test.nwpu.edu.cn.

4) VPN server addresses.

5) other sensitive servers.

The specific reference links are as follows:

http://www.lijiejie.com/dns-zone-transfer-1

https://blog.csdn.net/c465869935/article/details/53444117
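A zone transfer test can be sketched by calling dig and filtering its output. The sketch assumes the dig command is installed and that its answer lines follow the usual "name TTL IN TYPE data" layout; the function names are hypothetical, and a correctly configured name server will simply refuse the transfer and yield no records.

```python
import subprocess


def axfr_command(domain: str, nameserver: str) -> list:
    """Build the dig invocation that requests a full zone transfer (AXFR)."""
    return ["dig", "axfr", f"@{nameserver}", domain]


def parse_records(dig_output: str, types=("A", "AAAA", "CNAME")) -> list:
    """Extract (name, type, data) tuples from dig output, skipping comment lines."""
    records = []
    for line in dig_output.splitlines():
        line = line.strip()
        if not line or line.startswith(";"):
            continue
        parts = line.split(None, 4)  # name, ttl, class, type, data
        if len(parts) == 5 and parts[2] == "IN" and parts[3] in types:
            records.append((parts[0].rstrip("."), parts[3], parts[4]))
    return records


def try_zone_transfer(domain: str, nameserver: str, timeout: float = 30.0) -> list:
    """Run dig against one name server and return whatever records it leaks."""
    done = subprocess.run(axfr_command(domain, nameserver),
                          capture_output=True, text=True, timeout=timeout)
    return parse_records(done.stdout)
```

Each authoritative name server of the domain should be tried in turn, since often only one of them is misconfigured.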

VI. Sensitive directory and file collection

In attack and defense testing, probing web directories and hidden sensitive files is an important step. From them you can obtain the back-end management page, file upload interfaces, backup files, WEB-INF, robots, svn and the website's source code.

This is mainly done by scanning with tools, including:

(1) Yujian (Royal Sword) (many enhanced wordlists are available online)

(2) 7kbscan (7kbstorm): https://github.com/7kbstorm/7kbscan-WebPathBrute

(3) Search engines (Google, Baidu, Bing, etc.). Searching for sensitive files through search engines is also common, typically like this: site:xxx.xxx filetype:xls

(4) Crawlers (AWVS, Burp Suite, Polar Bear, etc.)

(5) BBScan (a script by Li Jiejie: https://github.com/lijiejie/BBScan)

(6) Lingfengyun search: https://www.lingfengyun.com/ (some users upload files to cloud drives, which then get crawled and indexed online)

(7) GitHub search
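What the scanning tools in this list do can be sketched in a few lines: join each wordlist path to the base URL, request it, and keep the responses that suggest something exists. The status codes treated as interesting and the function names are assumptions for illustration; real scanners also handle custom 404 pages and rate limiting.

```python
import urllib.error
import urllib.request


def build_urls(base: str, paths) -> list:
    """Join a base URL with wordlist paths such as robots.txt or .svn/entries."""
    root = base.rstrip("/")
    return [f"{root}/{p.strip().lstrip('/')}" for p in paths if p.strip()]


def probe(url: str, timeout: float = 5.0):
    """Return the HTTP status code for url, or None if the request failed outright."""
    req = urllib.request.Request(url, method="HEAD",
                                 headers={"User-Agent": "dir-probe-sketch"})
    try:
        # urllib follows redirects, so this is the status of the final response
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code
    except (urllib.error.URLError, OSError):
        return None


def interesting(status) -> bool:
    """Codes that usually mean a path exists: success, auth required, forbidden."""
    return status in (200, 401, 403)
```

A scan is then `[u for u in build_urls(base, wordlist) if interesting(probe(u))]`, which is why an enhanced wordlist matters as much here as it does for subdomains.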
